What we have learned about LLM agents over 1.5 years of building an AI data and analytics assistant

Introduction

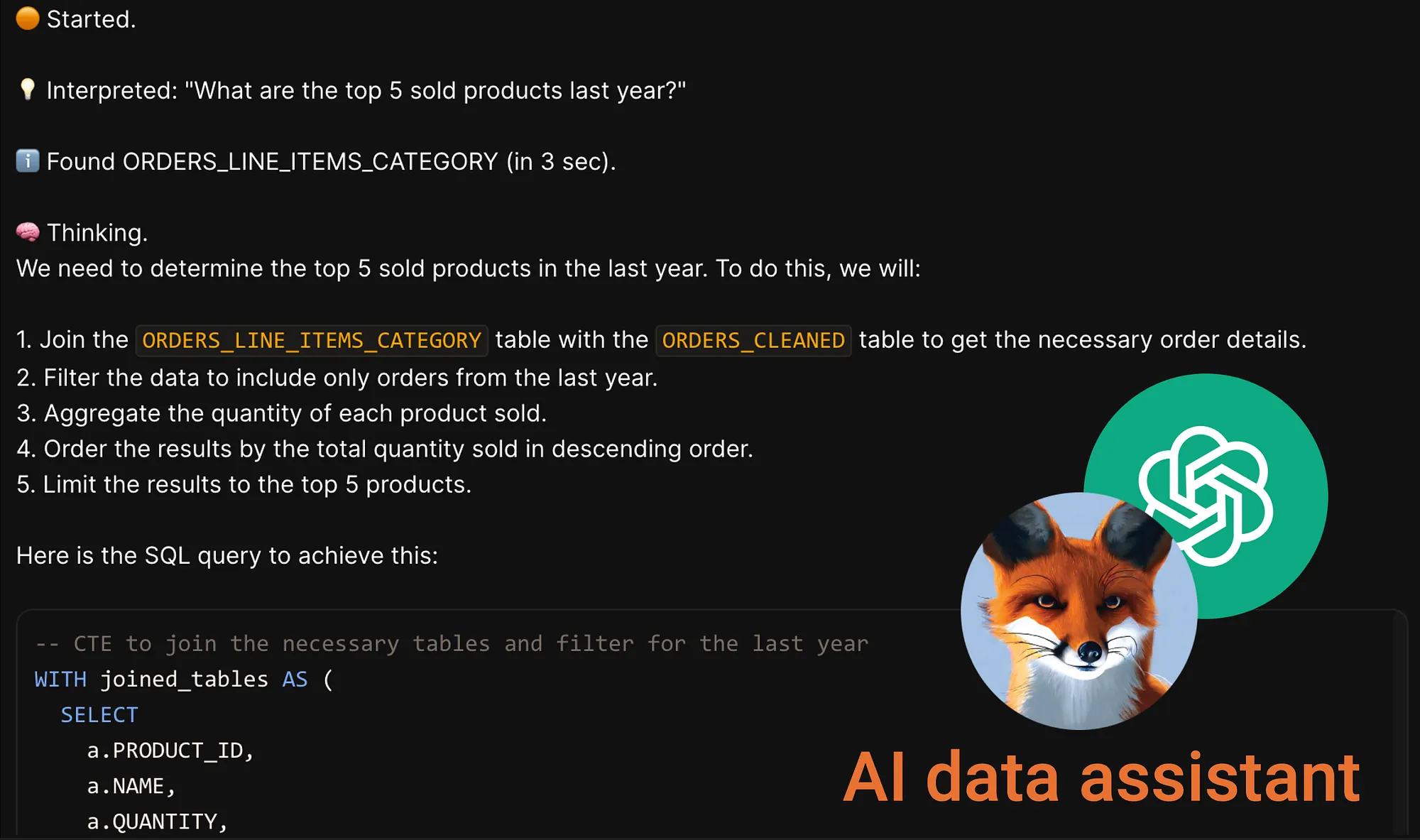

Our journey with Dot, an AI agent designed to help business stakeholders tackle data-related questions on Slack, has been quite the adventure. We've run into plenty of challenges along the way, but we've also picked up some valuable insights. Dot uses large language models (LLMs) to chat with users, dig through enterprise data, and handle fairly complex analytical tasks. By pulling in metadata from our data warehouses (schema info, documentation, join relationships, and query history), Dot can interpret questions, find the relevant data, write SQL statements, and even create visualizations. Here, I want to share some of the things we've learned over the past 1.5 years.

Paradigms of Developing AI Applications

When it comes to making the most of LLMs, we see three main approaches:

- Chat-based interactions: The simplest way, where users just chat with the AI.

- Workflow automation: Automating routine tasks, from writing documentation to analyzing customer churn.

- Agent-based systems: Giving the AI a goal and letting it figure out the steps, tools, and tasks to get there.

These methods overlap a bit but offer different ways to weave AI into software systems.
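To make the third paradigm more concrete, here is a minimal sketch of an agent loop. It assumes a planner function standing in for the LLM call, which picks one tool per step until it decides to finish. `run_agent`, `Action`, `scripted_planner`, and both tools are hypothetical names for illustration, not Dot's actual implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Action:
    tool: str      # name of the tool to call, or "finish" to stop
    argument: str  # input for the tool, or the final answer when finishing


@dataclass
class AgentRun:
    goal: str
    history: List[str] = field(default_factory=list)
    answer: Optional[str] = None


def run_agent(goal: str,
              plan_next: Callable[[str, List[str]], Action],
              tools: Dict[str, Callable[[str], str]],
              max_steps: int = 5) -> AgentRun:
    """Ask the planner for one action at a time until it finishes or runs out of steps."""
    run = AgentRun(goal=goal)
    for _ in range(max_steps):
        action = plan_next(goal, run.history)
        if action.tool == "finish":
            run.answer = action.argument
            break
        tool = tools.get(action.tool)
        observation = tool(action.argument) if tool else f"unknown tool {action.tool!r}"
        # Record what happened so the planner can see it on the next step.
        run.history.append(f"{action.tool}({action.argument!r}) -> {observation}")
    return run


def scripted_planner(goal: str, history: List[str]) -> Action:
    """Stand-in for an LLM call (hypothetical): look up a table, query it, then answer."""
    if not history:
        return Action("search_tables", goal)
    if len(history) == 1:
        return Action("run_sql", "SELECT COUNT(*) FROM churned_customers")
    return Action("finish", f"Based on {history[-1]}")


# Stub tools: a real agent would search the catalog and run queries here.
tools = {
    "search_tables": lambda query: "churned_customers",
    "run_sql": lambda sql: "42",
}
result = run_agent("How many customers churned?", scripted_planner, tools)
print(result.answer)
```

The `max_steps` cap is a simple guard against a planner that never decides to finish; the goal is fixed, while the steps and tool choices come from the model.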

Misconceptions about AI

A lot of folks think of AI as some sort of life form, but it's really advanced technology. Today's AIs, especially LLMs, are highly capable tools that follow instructions. Future AIs might become more life-like, but right now they're sophisticated software that handles human-like inputs.

Reflections on the Nature of Intelligence and Its Components

a. Task-Level Intelligence

LLMs show that intelligence can be task-specific and separate from having goals: they aim to satisfy user requests rather than pursue goals of their own.

b. Goal Orientation

Humans have their own goals and egos driving them, but LLMs are built to achieve user-defined objectives without any personal intent.

c. Memory

LLMs have a kind of memory, drawn from their training data and the current context window, but they don't keep learning continuously the way humans do. This makes it tricky to build systems that remember and adapt over time.

Key Practical Learnings in Developing an AI Agent

1. Reasoning is More Important Than Knowledge

Learning: Effective AI systems prioritize reasoning over memorization.

Technical Takeaway: LLMs should use their reasoning capabilities to interpret and manipulate the data in front of them rather than relying on extensive memorized knowledge.

Example with Dot: When Dot generates SQL queries, it uses an internal understanding of database structures, filling in specific details from context rather than recalling predefined templates.
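A minimal sketch of what "reasoning from context" can look like in practice: instead of filling in a SQL template, the prompt hands the model schema facts and business notes and asks it to work out the query itself. The function name, prompt wording, tables, and notes are all hypothetical, not Dot's actual prompt.

```python
from typing import Dict, List


def build_sql_prompt(question: str, schema: Dict[str, List[str]], notes: List[str]) -> str:
    """Give the model schema facts plus business notes and ask it to reason to a query.

    No SQL template is filled in here: the model composes the query itself
    from whatever context we supply.
    """
    parts = ["You are a data analyst. Write one SQL query that answers the question.",
             "Use only these tables and columns:"]
    for table, columns in schema.items():
        parts.append(f"- {table}: {', '.join(columns)}")
    if notes:
        parts.append("Business context:")
        parts.extend(f"- {note}" for note in notes)
    parts.append(f"Question: {question}")
    parts.append("First explain which tables, joins, and filters you need, then give the SQL.")
    return "\n".join(parts)


prompt = build_sql_prompt(
    "How many orders did EU customers place in May 2024?",
    {"orders": ["order_id", "customer_id", "created_at"],
     "customers": ["customer_id", "region"]},
    ["Region codes are stored as two-letter strings, e.g. 'EU'."],
)
print(prompt)
```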

2. Fine-Tuning vs. Prompt Engineering

Learning: Both fine-tuning and prompt engineering are valuable, but their effectiveness depends on the context.

Technical Takeaway: Fine-tuning can enhance performance and reduce costs, but prompt engineering remains crucial for flexibility and adaptability.

Example with Dot: We found that fine-tuning Dot for specific enterprise tasks improved response time and consistency, while prompt engineering ensured that Dot could adapt to different models and data sources.
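On the fine-tuning side, most of the work is preparing examples. The sketch below serializes (question, SQL) pairs into JSONL using the chat-style `messages` layout that OpenAI's fine-tuning API accepts. The system prompt and example pairs are hypothetical, and other providers may expect a different format.

```python
import json
from typing import Iterable, Tuple

# Hypothetical system prompt; reuse the same one at inference time.
SYSTEM_PROMPT = "You translate business questions into SQL for our data warehouse."


def to_finetune_jsonl(pairs: Iterable[Tuple[str, str]], system: str = SYSTEM_PROMPT) -> str:
    """Serialize (question, sql) pairs as chat-format JSONL, one example per line."""
    lines = []
    for question, sql in pairs:
        example = {
            "messages": [
                {"role": "system", "content": system},
                {"role": "user", "content": question},
                {"role": "assistant", "content": sql},
            ]
        }
        lines.append(json.dumps(example))
    return "\n".join(lines) + "\n"


examples = [
    ("How many orders were placed on 2024-05-01?",
     "SELECT COUNT(*) FROM orders WHERE order_date = DATE '2024-05-01'"),
    ("List our top 5 customers by revenue.",
     "SELECT customer_id, SUM(amount) AS revenue FROM orders "
     "GROUP BY customer_id ORDER BY revenue DESC LIMIT 5"),
]
jsonl = to_finetune_jsonl(examples)
print(jsonl)
```

Keeping the system prompt as a separate parameter means the same prompt text can be tested on models that are not fine-tuned, which is where prompt engineering keeps its flexibility.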

3. Crucial Role of the Context Window

Learning: The size and management of the context window significantly impact the performance of LLMs.

Technical Takeaway: Larger context windows let you pass more data, but filling them can degrade model performance if not handled carefully, so it is essential to benchmark how accuracy changes as the context grows.

Example with Dot: By keeping the context we send to the model within 32,000 tokens, we ensured Dot maintained high performance in generating accurate SQL queries and visualizations.
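One simple way to respect a token ceiling is to rank candidate context snippets and greedily keep the most important ones that fit. This is a sketch under two assumptions: snippets already carry a priority score, and tokens are estimated at roughly 4 characters each. A real system would count tokens with the model's own tokenizer (for example, `tiktoken` for OpenAI models). `pack_context` and `approx_tokens` are hypothetical helpers.

```python
from typing import List, Tuple

CONTEXT_BUDGET = 32_000  # token ceiling mentioned in the text


def approx_tokens(text: str) -> int:
    """Rough token estimate: about 4 characters per token (assumption, not a tokenizer)."""
    return max(1, len(text) // 4)


def pack_context(items: List[Tuple[float, str]], budget: int = CONTEXT_BUDGET,
                 reserved: int = 4_000) -> List[str]:
    """Greedily keep the highest-priority snippets that fit under the token budget.

    `reserved` leaves room for instructions and the model's answer. Snippets that
    do not fit are skipped, and smaller lower-priority ones may still be added.
    """
    remaining = budget - reserved
    kept = []
    for _priority, text in sorted(items, key=lambda item: item[0], reverse=True):
        cost = approx_tokens(text)
        if cost <= remaining:
            kept.append(text)
            remaining -= cost
    return kept


snippets = [
    (0.2, "a" * 400),  # e.g. older query history
    (0.9, "b" * 800),  # e.g. schema of the most relevant table
    (0.5, "c" * 200),  # e.g. a documentation excerpt
]
kept = pack_context(snippets, budget=300, reserved=50)
print([len(text) for text in kept])
```

Running the same evaluation set at several budgets is a cheap way to do the benchmarking mentioned above and find where accuracy starts to drop.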

4. Efficient Information Representation

Learning: Clear and concise information representation is critical for effective AI interactions.

Technical Takeaway: Balancing the amount of information provided to the LLM is key. Too little leads to ambiguity; too much leads to overload.

Example with Dot: We developed a method to present database schemas and business logic concisely, allowing Dot to generate precise and relevant queries without overwhelming the model.
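As an illustration of concise schema representation, the sketch below renders each table on one line, caps very wide tables, and lists join keys explicitly. The format is an assumption for illustration, not the representation Dot actually uses; `render_schema` and the sample tables are hypothetical.

```python
from typing import Dict, List, Tuple


def render_schema(tables: Dict[str, List[str]], joins: List[Tuple[str, str]],
                  max_columns: int = 8) -> str:
    """Render tables as one compact line each, followed by known join keys.

    Columns beyond `max_columns` are summarized as a count so very wide tables
    do not crowd out everything else in the context.
    """
    lines = []
    for table, columns in tables.items():
        shown = columns[:max_columns]
        extra = len(columns) - len(shown)
        suffix = f", +{extra} more" if extra > 0 else ""
        lines.append(f"{table}({', '.join(shown)}{suffix})")
    for left, right in joins:
        lines.append(f"JOIN {left} = {right}")
    return "\n".join(lines)


schema = render_schema(
    {"orders": ["order_id", "customer_id", "amount", "created_at"],
     "customers": ["customer_id", "region", "signup_date"]},
    [("orders.customer_id", "customers.customer_id")],
)
print(schema)
```

Compared with dumping full DDL or JSON metadata, a line per table keeps the token cost roughly proportional to the number of columns the model actually needs to see.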

5. Model Selection

Learning: Using diverse models can improve robustness and future-proofing.

Technical Takeaway: Testing prompts across different models ensures generalizability and prevents overfitting to a single provider's strengths.

Example with Dot: We validated Dot's prompts on both OpenAI and Anthropic models, ensuring consistent performance and adaptability to future model updates.
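Validating prompts across providers can start as a small harness: run the same test cases through every model and compare pass rates. In this sketch each "model" is just a function from prompt to completion; in practice each would wrap a provider's SDK. The stub models, test case, and whitespace-insensitive SQL check are hypothetical.

```python
from typing import Callable, Dict, List

# A "model" is any function from prompt to completion. In practice each entry
# would wrap a different provider's client (hypothetical adapters here).
Model = Callable[[str], str]


def normalized_sql_match(output: str, expected: str) -> bool:
    """Crude check: compare SQL after lowercasing and collapsing whitespace."""
    def normalize(sql: str) -> str:
        return " ".join(sql.lower().split())
    return normalize(output) == normalize(expected)


def evaluate_across_models(models: Dict[str, Model],
                           cases: List[Dict[str, str]],
                           check: Callable[[str, str], bool]) -> Dict[str, float]:
    """Run every test case through every model and return each model's pass rate."""
    scores = {}
    for name, model in models.items():
        passed = sum(check(model(case["prompt"]), case["expected"]) for case in cases)
        scores[name] = passed / len(cases) if cases else 0.0
    return scores


cases = [{"prompt": "Count all orders.", "expected": "SELECT COUNT(*) FROM orders"}]
models = {
    "provider_a": lambda prompt: "select count(*)\nfrom orders",  # stub output
    "provider_b": lambda prompt: "SELECT * FROM orders",          # stub output
}
scores = evaluate_across_models(models, cases, normalized_sql_match)
print(scores)
```

String comparison is deliberately crude; comparing the result sets of executed queries is a more forgiving check, since two different SQL statements can return the same answer.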

6. Human-Centric Design

Learning: Effective AI systems integrate seamlessly into human workflows and environments.

Technical Takeaway: Designing AI systems that align with existing user interfaces and practices enhances usability and productivity.

Example with Dot: By embedding Dot in Slack, email, and Teams channels, we ensured that users could interact with the AI within their familiar communication tools, making the experience more intuitive and efficient.
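One way to serve several channels from a single agent is to keep the answer channel-independent and add a thin formatter per channel. The sketch below is an assumption about how such adapters could look, not Dot's code: the Slack formatter builds a payload with Block Kit `section` blocks and `mrkdwn` text, the email formatter builds plain text, and a Teams formatter would follow the same pattern. `Answer`, `deliver`, and the formatter classes are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, Protocol


@dataclass
class Answer:
    summary: str  # plain-language answer produced by the agent
    sql: str      # query used, shown so analysts can check the work


class ChannelFormatter(Protocol):
    def format(self, answer: Answer) -> Dict[str, Any]: ...


class SlackFormatter:
    """Shapes the answer as a Slack message with Block Kit section blocks."""

    def format(self, answer: Answer) -> Dict[str, Any]:
        return {
            "text": answer.summary,  # fallback text used in notifications
            "blocks": [
                {"type": "section", "text": {"type": "mrkdwn", "text": answer.summary}},
                {"type": "section", "text": {"type": "mrkdwn", "text": f"SQL: `{answer.sql}`"}},
            ],
        }


class EmailFormatter:
    """Shapes the answer as a plain-text email."""

    def format(self, answer: Answer) -> Dict[str, Any]:
        return {
            "subject": "Answer to your data question",
            "body": f"{answer.summary}\n\nSQL used:\n{answer.sql}",
        }


def deliver(answer: Answer, channel: str,
            formatters: Dict[str, ChannelFormatter]) -> Dict[str, Any]:
    """Render one channel-independent answer for the channel the user asked from."""
    return formatters[channel].format(answer)


formatters: Dict[str, ChannelFormatter] = {"slack": SlackFormatter(), "email": EmailFormatter()}
answer = Answer("Example summary of the query result.", "SELECT SUM(amount) FROM orders")
slack_message = deliver(answer, "slack", formatters)
email_message = deliver(answer, "email", formatters)
```

Keeping presentation at the edges means the reasoning and querying logic stays identical no matter where the question was asked.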


Conclusion

Our experience developing Dot has underscored the importance of viewing AI as a tool designed to augment human capabilities, especially for analysts. By focusing on reasoning, fine-tuning, context management, information representation, diverse model usage, and user-centric design, we have built a robust and effective AI agent. While the landscape of AI technology is rapidly evolving, these foundational insights guide our continuous improvement and innovation, helping us create systems that not only perform complex tasks but also enhance productivity and add a bit of fun to data work. :)

Theo Tortorici

Theo writes about AI-powered analytics, data tools, and the future of business intelligence at Dot.