What happens when a company processing 220 TB of data daily decides that dashboards aren't enough anymore?

DoorDash faced a problem familiar to every fast-growing data organization: knowledge was everywhere and nowhere at the same time. Experimentation results lived in one system, metrics in another, dashboards in Sigma, documentation in wikis, and critical context buried in Slack threads. Answering a business question meant a scavenger hunt across five different tools.

Their solution? An internal agentic AI platform that evolved from simple automation workflows to sophisticated multi-agent "swarms"—and the lessons from their journey offer a blueprint for any data team considering similar investments.

The scale of DoorDash's data challenge is staggering:

With this scale came an inevitable problem: answering complex business questions required significant context-switching—searching wikis, asking in Slack, writing SQL, and filing Jira tickets. An analyst investigating a trend might spend more time finding data than analyzing it.

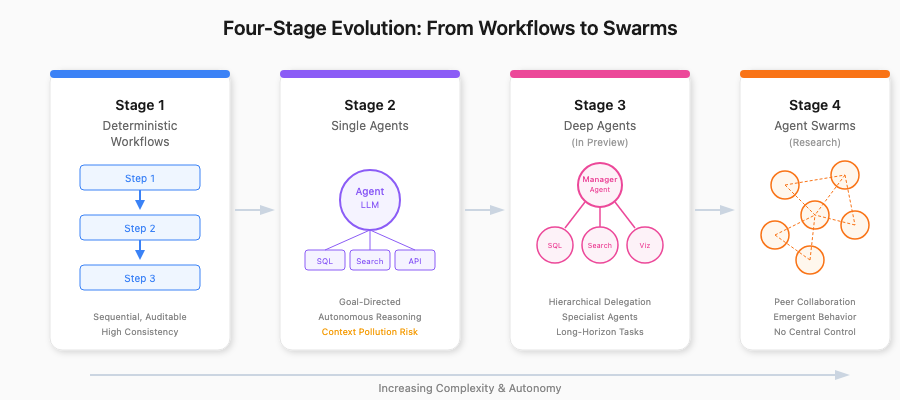

DoorDash didn't try to build an autonomous AI analyst on day one. Instead, they mapped out four distinct architectural stages, each building on the last:

Workflows are the foundation. Think of them as "factory assembly lines": pre-defined, sequential steps optimized for repeatability. One workflow automates Finance and Strategy reporting by pulling data from Google Docs, Sheets, Snowflake, and Slack to generate business operations summaries and year-over-year analyses.

When to use: High-stakes tasks where consistency and auditability are paramount. Every step is logged, every output is traceable.
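Here is a minimal sketch of a deterministic workflow runner in Python that makes the "assembly line" idea concrete. It assumes each step is a plain function over a shared state dictionary. The step names and revenue figures are hypothetical placeholders, not DoorDash's actual Finance and Strategy workflow. The fixed step order and the per-step audit record provide the consistency and traceability described above.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# A step is a (name, function) pair; each function maps the pipeline state to a new state.
Step = tuple[str, Callable[[dict[str, Any]], dict[str, Any]]]


@dataclass
class Workflow:
    steps: list[Step]
    audit_log: list[dict[str, Any]] = field(default_factory=list)

    def run(self, state: dict[str, Any]) -> dict[str, Any]:
        # Steps always execute in the same fixed order; no model decides what runs next.
        for name, fn in self.steps:
            input_keys = sorted(state)
            state = fn(dict(state))  # pass a copy so the previous state is never mutated
            # Record what each step saw and produced, so every output is traceable.
            self.audit_log.append(
                {"step": name, "input_keys": input_keys, "output_keys": sorted(state)}
            )
        return state


def fetch_metrics(state):
    # Hypothetical stand-in for pulling figures from Sheets or Snowflake; values are made up.
    state["revenue"] = {"2023": 100.0, "2024": 120.0}
    return state


def compute_yoy(state):
    r = state["revenue"]
    state["yoy_pct"] = round((r["2024"] - r["2023"]) / r["2023"] * 100, 1)
    return state


def summarize(state):
    state["summary"] = f"Revenue grew {state['yoy_pct']}% year over year."
    return state


wf = Workflow([("fetch", fetch_metrics), ("yoy", compute_yoy), ("summary", summarize)])
result = wf.run({})
print(result["summary"])  # Revenue grew 20.0% year over year.
```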

Single agents are the first step toward autonomy. A single agent receives a goal, reasons about how to achieve it, and executes using available tools. DoorDash's DataExplorer agent demonstrates this: it can take an ambiguous request like "Investigate the drop in conversions in the Midwest last week" and work out for itself which data to pull and which tools to use. Uber's QueryGPT, which decomposes text-to-SQL into four focused agents, tackles a related problem.

The limitation: Context pollution. As agents perform more steps, their context window fills with intermediate thoughts, degrading reasoning quality and limiting their ability to handle long-running tasks.

To overcome single-agent limitations, DoorDash introduced "deep agents"—multiple agents organized hierarchically to manage complex, long-horizon tasks. The core principle is specialization and delegation: a manager agent coordinates specialist agents, each focused on a specific capability.

Use case: Market-level strategic planning that requires task decomposition across multiple data sources and analysis types.
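A toy model illustrates the difference between a single agent and a hierarchy. In it, an agent's context is just a list of entries standing in for tokens. This is a hypothetical sketch, not DoorDash's implementation: `ToyAgent`, the subtask names, and the step counts are invented for illustration. It shows why delegation helps with context pollution. The single agent accumulates every intermediate step, while the manager holds only summaries and each specialist starts from an empty context.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAgent:
    """Hypothetical agent whose context is a list of text entries (a stand-in for tokens)."""
    name: str
    context: list[str] = field(default_factory=list)

    def work(self, task: str, steps: int) -> str:
        # Every intermediate reasoning or tool step lands in this agent's own context.
        for i in range(steps):
            self.context.append(f"{self.name} | {task} | step {i + 1}")
        # Only a compact summary is handed back to the caller.
        return f"summary: {task}"


def single_agent(tasks: list[str], steps: int) -> int:
    """One agent does everything, so all intermediate steps pile up in one context."""
    agent = ToyAgent("solo")
    for task in tasks:
        agent.context.append(agent.work(task, steps))
    return len(agent.context)


def manager_with_specialists(tasks: list[str], steps: int) -> tuple[int, int]:
    """A manager delegates each subtask to a fresh specialist and keeps only summaries."""
    manager = ToyAgent("manager")
    largest_specialist = 0
    for task in tasks:
        specialist = ToyAgent(f"specialist:{task}")  # new, empty context per subtask
        manager.context.append(specialist.work(task, steps))
        largest_specialist = max(largest_specialist, len(specialist.context))
    return len(manager.context), largest_specialist


tasks = ["demand", "supply", "pricing"]  # hypothetical subtasks of a market plan
print(single_agent(tasks, steps=5))              # 18
print(manager_with_specialists(tasks, steps=5))  # (3, 5)
```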

Swarms are dynamic networks of peer agents that collaborate asynchronously without centralized control. Unlike hierarchical deep agents, swarms rely on distributed intelligence: no single agent has the complete picture, but coherent solutions emerge from local interactions. DoorDash compares this to ant colonies rather than corporate org charts.

The challenge: Governance and explainability. Emergent behavior makes it difficult to trace exact decision paths.
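Real swarms run asynchronously, but a blackboard-style simulation captures the core idea deterministically. Peers read and write a shared board, and each one decides locally whether it can contribute. The sketch below is an illustrative approximation under that assumption. `PeerAgent`, `run_swarm`, and the toy anomaly data are hypothetical. The `trace` list offers one simple answer to the explainability concern: it records which peer acted in which round.

```python
from typing import Callable


class PeerAgent:
    """Hypothetical peer: declares which artifacts it needs and which one it produces."""

    def __init__(self, name: str, needs: set[str], produces: str, fn: Callable[[dict], object]):
        self.name, self.needs, self.produces, self.fn = name, needs, produces, fn

    def maybe_act(self, board: dict) -> bool:
        # Decide using only local information: are my inputs posted and my output still missing?
        if self.produces in board or not self.needs.issubset(board):
            return False
        board[self.produces] = self.fn(board)
        return True


def run_swarm(agents: list[PeerAgent], board: dict, max_rounds: int = 10):
    """No coordinator assigns work; peers poll a shared board until no one can contribute."""
    trace = []  # which peers acted in which round, kept for explainability
    for round_no in range(max_rounds):
        acted = [a.name for a in agents if a.maybe_act(board)]
        if not acted:
            break
        trace.append((round_no, acted))
    return board, trace


agents = [  # deliberately listed out of order; no agent knows the overall plan
    PeerAgent("reporter", {"anomalies", "drivers"}, "report", lambda b: f"Report: {b['drivers']}"),
    PeerAgent("explainer", {"anomalies"}, "drivers", lambda b: f"{len(b['anomalies'])} spike(s) flagged"),
    PeerAgent("detector", {"metrics"}, "anomalies", lambda b: [x for x in b["metrics"] if x > 20]),
]
board, trace = run_swarm(agents, {"metrics": [10, 11, 30, 12]})
print(board["report"])  # Report: 1 spike(s) flagged
print(trace)            # [(0, ['detector']), (1, ['explainer']), (2, ['reporter'])]
```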

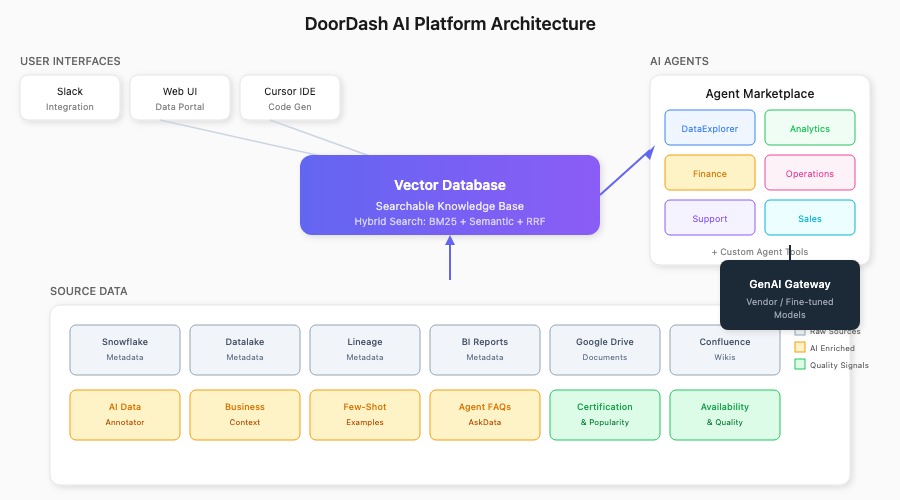

At the heart of the platform is a multistage search engine built on a vector database. It combines keyword and semantic (vector) search and fuses the ranked results with Reciprocal Rank Fusion (RRF).

This hybrid approach is critical because enterprise knowledge spans wikis, experimentation results, and thousands of dashboards—no single search paradigm captures everything.
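Later in the post, the hybrid search is described as RRF-based. Reciprocal Rank Fusion merges ranked lists by scoring each document as the sum of 1 / (k + rank) across retrievers. A common default is k = 60. The sketch below assumes two retrievers (keyword and vector) that return document IDs. The IDs are hypothetical, and the source does not specify DoorDash's actual retrievers, weighting, or value of k.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked lists: score(d) = sum over lists of 1 / (k + rank of d)."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Documents that several retrievers rank well rise to the top.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical results for "conversion drop Midwest" from two retrievers.
keyword_hits = ["wiki:conversion-metrics", "dash:midwest-funnel", "exp:checkout-v2"]
vector_hits = ["dash:midwest-funnel", "exp:checkout-v2", "wiki:regional-ops"]
for doc_id, score in reciprocal_rank_fusion([keyword_hits, vector_hits]):
    print(f"{score:.4f}  {doc_id}")
```

Both retrievers rank the dashboard highly, so it comes out on top. It finishes ahead of the wiki page that only the keyword retriever placed first.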

Their "secret sauce" for accurate SQL: a custom DescribeTable tool that provides agents with compact, engine-agnostic column definitions enriched with pre-cached example values.
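The source does not show DescribeTable's output format. The following is only a guess at what a compact, engine-agnostic description with cached examples could look like. The `Column` fields, the rendering format, and the `orders` table are hypothetical. In practice, the example values would presumably be refreshed offline rather than queried at prompt time.

```python
from dataclasses import dataclass


@dataclass
class Column:
    name: str
    dtype: str           # engine-agnostic label such as "string", not a warehouse-specific type
    description: str
    examples: list[str]  # pre-cached sample values, assumed to be refreshed offline


def describe_table(table: str, columns: list[Column], max_examples: int = 3) -> str:
    """Render a compact, prompt-friendly table description with example values."""
    lines = [f"table {table}"]
    for col in columns:
        sample = ", ".join(repr(v) for v in col.examples[:max_examples])
        lines.append(f"- {col.name} ({col.dtype}): {col.description}; e.g. {sample}")
    return "\n".join(lines)


orders = [  # hypothetical table, columns, and values
    Column("country_code", "string", "country where the order was placed", ["US", "CA", "AU", "NZ"]),
    Column("product_type", "string", "order vertical", ["restaurant", "grocery", "retail"]),
]
print(describe_table("orders", orders))
```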

This pre-caching dramatically improves WHERE clause accuracy. When an agent needs to filter by country or product type, it has concrete examples—not just column names—to reference. The end-to-end flow:

Search → DescribeTable → SQL Generation → Multi-stage Validation → Response

Trust is built through transparency and reliability. DoorDash implemented a multi-layered guardrail system:

Platform-wide guardrails:

Agent-specific guardrails:

For ongoing quality monitoring, DoorDash uses an LLM Judge that assesses performance across five dimensions:

This automated oversight is what they consider non-negotiable for deploying AI into critical business functions.
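The post does not spell out what the "Multi-stage Validation" step checks. As a hypothetical illustration of an agent-specific guardrail, the sketch below runs generated SQL through three sequential checks: a single statement only, read-only keywords, and a row limit. For brevity, it relies on regular expressions, an assumption made to keep the example self-contained. A production system would more likely use a real SQL parser and a dry run against the warehouse.

```python
import re

# Naive keyword screen for write/DDL statements; a real guardrail would parse the SQL instead.
WRITE_KEYWORDS = re.compile(
    r"\b(insert|update|delete|merge|drop|alter|create|truncate|grant|revoke)\b", re.IGNORECASE
)


def validate_sql(sql: str, max_rows: int = 1000) -> tuple[bool, str]:
    """Return (ok, sql_or_reason) after a few sequential checks on generated SQL."""
    query = sql.strip().rstrip(";").strip()
    # Stage 1: a single statement only (naive: also rejects ';' inside string literals).
    if ";" in query:
        return False, "multiple statements are not allowed"
    # Stage 2: read-only queries only.
    if not re.match(r"(select|with)\b", query, re.IGNORECASE):
        return False, "only SELECT or WITH queries are allowed"
    if WRITE_KEYWORDS.search(query):
        return False, "query contains a write or DDL keyword"
    # Stage 3: bound the result size if the query has no trailing LIMIT.
    if not re.search(r"\blimit\s+\d+$", query, re.IGNORECASE):
        query = f"{query} LIMIT {max_rows}"
    return True, query


print(validate_sql("SELECT country_code, COUNT(*) FROM orders GROUP BY 1;"))
print(validate_sql("DROP TABLE orders"))
```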

The platform's applications span customer support, search evaluation, safety moderation (SafeChat), and finance operations.

DoorDash isn't building in isolation. Their architecture embraces two emerging standards:

Model Context Protocol (MCP): Standardizes how agents access tools and data, ensuring secure, auditable interactions with internal knowledge bases. OpenAI's internal platform demonstrates MCP at scale, connecting 3,500 users to 600 petabytes of data. This is the bedrock of single-agent capabilities.

Agent-to-Agent Protocol (A2A): Developed by Google and donated to the Linux Foundation, A2A standardizes inter-agent communication. DoorDash sees this as key to unlocking deep agents and swarms at scale, enabling agent discovery, asynchronous state management, and lifecycle events.
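At the wire level, MCP frames messages as JSON-RPC 2.0. A client invokes a tool with the `tools/call` method, passing the tool `name` and its `arguments`, and results come back as a list of typed content items. The sketch below builds such a request and extracts the text from a response. The `describe_table` tool name and the sample reply are hypothetical. The sketch also omits the transport and the initialization handshake that a real MCP session requires.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC request ids should not be reused within a session


def tools_call_request(tool_name: str, arguments: dict) -> str:
    """Build an MCP 'tools/call' request; MCP frames its messages as JSON-RPC 2.0."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


def tool_result_text(response: str) -> str:
    """Extract the text items from a tools/call response, surfacing errors."""
    message = json.loads(response)
    if "error" in message:  # protocol-level JSON-RPC error object
        raise RuntimeError(message["error"]["message"])
    result = message["result"]
    if result.get("isError"):  # the tool itself reported a failure
        raise RuntimeError("tool reported an error")
    return "\n".join(item["text"] for item in result["content"] if item.get("type") == "text")


request = tools_call_request("describe_table", {"table": "orders"})  # hypothetical tool
reply = '{"jsonrpc": "2.0", "id": 1, "result": {"content": [{"type": "text", "text": "orders: 3 columns"}]}}'
print(json.loads(request)["method"])  # tools/call
print(tool_result_text(reply))        # orders: 3 columns
```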

A critical insight: platform power means nothing without accessibility.

DoorDash's agents integrate directly with:

This multi-surface strategy dramatically accelerates decision-making by eliminating the productivity drain of tool-switching.

"Building a robust multi-agent system is a journey of increasing complexity and capability. You can't jump straight to sophisticated, multi-agent collaboration; you must first build a solid foundation."

Advanced multi-agent designs only amplify inconsistencies in underlying components. DoorDash spent significant time perfecting single-agent primitives—schema-aware SQL generation, multistage document retrieval—before attempting agent collaboration.

DoorDash doesn't try to replace one paradigm with another. Their approach:

Different problems need different tools.

Every production AI system needs:

The shift from single agents to deep agents was driven by a practical limitation: as agents perform more steps, their context fills with intermediate reasoning, degrading quality and increasing costs. Hierarchical delegation keeps each agent's context focused.

For deep agents and swarms, a persistent workspace—more than just a virtual file system—allows agents to create artifacts that others can access hours or days later. This enables collaboration on problems too large for any single agent's context window.
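A persistent workspace can be as simple as a shared store keyed by artifact name that records who wrote what. The sketch below assumes SQLite from Python's standard library as the backing store. The `Workspace` class, the key naming scheme, and the agent names are hypothetical. The point is that the artifact outlives the agent that wrote it: a second connection, opened later, reads the same data.

```python
import os
import sqlite3
import tempfile
import time
from typing import Optional


class Workspace:
    """Hypothetical shared workspace: artifacts persist in SQLite beyond any one agent's context."""

    def __init__(self, path: str):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS artifacts ("
            "key TEXT PRIMARY KEY, author TEXT NOT NULL, "
            "content TEXT NOT NULL, updated_at REAL NOT NULL)"
        )
        self.conn.commit()

    def put(self, key: str, author: str, content: str) -> None:
        with self.conn:  # commits the transaction on success, rolls back on error
            self.conn.execute(
                "INSERT OR REPLACE INTO artifacts (key, author, content, updated_at) "
                "VALUES (?, ?, ?, ?)",
                (key, author, content, time.time()),
            )

    def get(self, key: str) -> Optional[tuple]:
        return self.conn.execute(
            "SELECT author, content FROM artifacts WHERE key = ?", (key,)
        ).fetchone()

    def close(self) -> None:
        self.conn.close()


# Session 1: one agent leaves an artifact and exits.
path = os.path.join(tempfile.mkdtemp(), "workspace.db")
ws = Workspace(path)
ws.put("midwest/conversion-drop", "analysis-agent", "draft notes for the Midwest investigation")
ws.close()

# Session 2 (possibly much later): a different agent opens the same store and reads it.
later = Workspace(path)
row = later.get("midwest/conversion-drop")
print(row)  # ('analysis-agent', 'draft notes for the Midwest investigation')
later.close()
```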

vs. Databricks/Snowflake AI Assistants:

Platform vendors such as Databricks (Agent Bricks) and Snowflake (Snowflake Intelligence) offer point solutions for querying data in natural language. DoorDash's approach is more ambitious: a unified cognitive layer across all enterprise knowledge, not just the data warehouse.

vs. Generic RAG Systems:

Most RAG implementations retrieve documents and generate answers. DoorDash adds schema-aware SQL generation, pre-cached examples for filtering accuracy, multi-stage validation, and hierarchical agent delegation—a significantly more sophisticated stack. Airbnb's 13-model ensemble uses comparable validation patterns.

vs. Off-the-Shelf Agent Frameworks:

Frameworks like LangGraph provide building blocks, but DoorDash's implementation includes enterprise-specific capabilities: RRF-based hybrid search tuned for their data, custom lemmatization for table names, and guardrails integrated with their governance requirements. For a contrasting philosophy, Vercel's d0 removed 80% of tools and achieved 100% success with just file operations and bash.

DoorDash's phased approach continues:

The most interesting work—swarms of agents solving complex, real-time logistics challenges—is still on the research frontier. But the foundation is in place.

For data teams watching from the sidelines, the message is clear: the transition from dashboards to intelligent data platforms isn't theoretical anymore. Companies like DoorDash are building it today, learning from failures, and sharing the blueprint.

The question isn't whether AI will transform how organizations access data. It's whether you'll be ready when it does.