When Airbnb CEO Brian Chesky decided to tackle customer support with AI, he chose what he calls "the hardest problem" - high stakes, real-time responses, and zero tolerance for hallucination. The result is a system built on 13 different models that has reduced human agent contact by 15% and cut agent manual processing time by 13%. Here's what data leaders can learn from Airbnb's approach.

Before diving into the solution, it's worth understanding what Airbnb was up against. Their original Automation Platform (v1) relied on rigid, predefined workflows - the kind where every possible conversation path had to be manually mapped out in advance.

Sound familiar? Most enterprise AI systems start here. It's safe, predictable, and completely unmaintainable at scale.

The limitations became obvious:

Airbnb needed something smarter - but not so smart that it would hallucinate refund policies or make up reservation details.

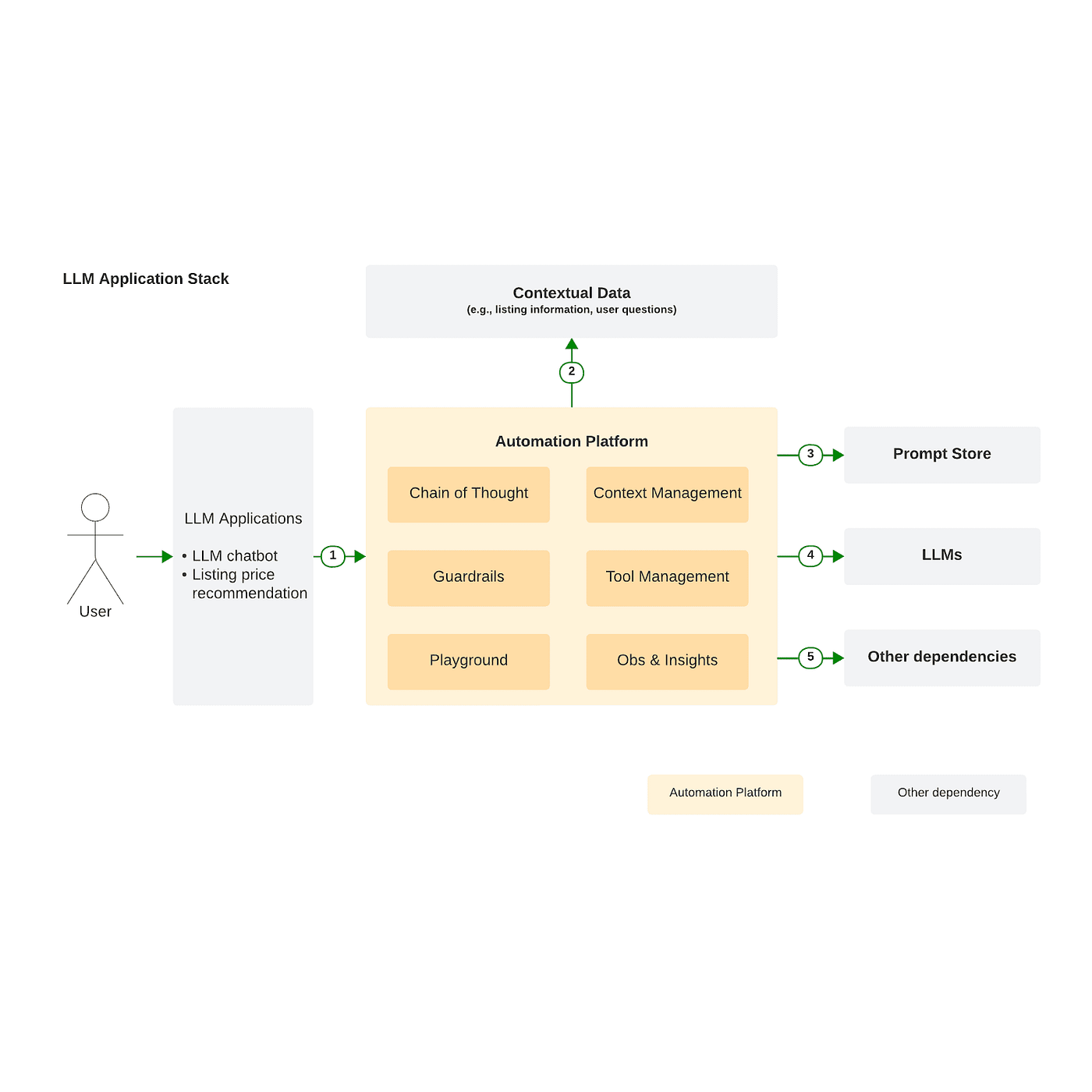

Airbnb's key insight was that the choice between "traditional ML" and "LLMs" is a false dichotomy. Their Automation Platform v2 combines both - using LLMs where they excel (natural language understanding, flexible reasoning) while falling back to deterministic workflows where precision is non-negotiable.

The platform has three major components:

1. Chain of Thought Workflow Engine

Airbnb implemented Chain of Thought (CoT) as an actual workflow system, not just a prompting technique. The flow works like this:

2. Tools as the Interface to Reality

Here's where Airbnb's platform gets interesting. Rather than letting LLMs generate arbitrary outputs, they constrain them to selecting and invoking pre-defined tools - essentially treating the LLM as a reasoning engine that decides which action to take, not how to execute it.

Tools include:

Each tool has a unified interface and runs in a managed execution environment. This means the LLM can decide "we need to check this reservation" but the actual database query runs through validated, tested code.
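A minimal sketch of the tool-selection idea, not Airbnb's actual code: the `Tool` and `ToolRegistry` classes, the `check_reservation` function, and the `llm_decision` dictionary are all hypothetical names invented for illustration. The point it tries to show is that the model only names a tool and its arguments, while the lookup itself runs through ordinary, testable code.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class Tool:
    """A pre-defined action the LLM may select but never implement."""
    name: str
    description: str
    run: Callable[..., Any]


class ToolRegistry:
    """Hypothetical unified interface: every tool is invoked the same way."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def invoke(self, name: str, **kwargs: Any) -> Any:
        # Reject any tool name that was not registered in advance.
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name].run(**kwargs)


# Stand-in data and lookup; a real system would query a reservation service.
_RESERVATIONS = {"R123": {"status": "confirmed", "nights": 3}}


def check_reservation(reservation_id: str) -> dict:
    return _RESERVATIONS.get(reservation_id, {"status": "not_found"})


registry = ToolRegistry()
registry.register(
    Tool("check_reservation", "Look up a reservation by id", check_reservation)
)

# Assumed shape of the LLM's structured decision: a tool name plus arguments.
llm_decision = {"tool": "check_reservation", "args": {"reservation_id": "R123"}}
result = registry.invoke(llm_decision["tool"], **llm_decision["args"])
```

If the model names a tool that was never registered, `invoke` raises instead of improvising, which is the property that keeps the LLM out of the execution path.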

3. Guardrails Framework

This is Airbnb's answer to the hallucination problem. The Guardrails Framework monitors all LLM communications and can execute multiple validation checks in parallel. Other companies report similar patterns: DoorDash reports a 90% hallucination reduction with comparable multi-stage validation, and Uber's QueryGPT uses similar agent decomposition for text-to-SQL, splitting tasks across four specialized agents.
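One way to picture parallel validation is the sketch below. It assumes each guardrail is a plain function that returns a verdict; the three checks and their rules are toy examples made up here, not Airbnb's guardrails. A thread pool runs every check concurrently, so total added latency is roughly that of the slowest check rather than the sum of all of them.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, NamedTuple


class Verdict(NamedTuple):
    guardrail: str
    passed: bool


# Hypothetical guardrails; real checks would call moderation or policy services.
def no_empty_reply(text: str) -> Verdict:
    return Verdict("no_empty_reply", bool(text.strip()))


def no_refund_promise(text: str) -> Verdict:
    return Verdict("no_refund_promise", "guaranteed refund" not in text.lower())


def max_length(text: str) -> Verdict:
    return Verdict("max_length", len(text) <= 500)


GUARDRAILS: List[Callable[[str], Verdict]] = [
    no_empty_reply,
    no_refund_promise,
    max_length,
]


def run_guardrails(text: str) -> List[Verdict]:
    """Run every guardrail concurrently and collect the verdicts in order."""
    with ThreadPoolExecutor(max_workers=len(GUARDRAILS)) as pool:
        futures = [pool.submit(check, text) for check in GUARDRAILS]
        return [future.result() for future in futures]


def is_safe(text: str) -> bool:
    """A draft reply is released only if every guardrail passes."""
    return all(verdict.passed for verdict in run_guardrails(text))
```

Because each guardrail shares the same signature, a team can add a new check by appending one function to the list without touching the runner.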

The architecture allows engineers from different teams to create reusable guardrails:

The counterintuitive finding: a smaller, fine-tuned model outperformed larger models in production because latency matters as much as accuracy. A brilliant answer that takes 46 seconds is worse than a good answer in 4.5 seconds.

Their fine-tuned Mistral-7B with Chain of Thought achieved a 13% reduction in manual processing time - compared to a 3% increase with larger models due to latency.

One of Airbnb's most transferable innovations is their Intent, Context, Action (ICA) format for representing business knowledge.

The problem: Enterprise workflows are written for humans with specific training. They're full of colloquial language, complex nested conditions, and implicit assumptions. LLMs struggle to parse them reliably.

The solution: Transform all workflows into a structured ICA format:

The format change alone provided substantial accuracy gains before any model fine-tuning:

This suggests that how you represent knowledge to LLMs matters as much as which model you use.
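To make the idea concrete, here is a hedged sketch of what an Intent, Context, Action record could look like in code. The field names, the `render` helper, and the cancellation rule are assumptions made up for illustration; the source does not specify Airbnb's actual schema or policies.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ICARule:
    """One unit of business knowledge in an assumed ICA layout."""
    intent: str          # what the customer wants
    context: List[str]   # conditions that must hold for the rule to apply
    action: str          # what the system should do


def render(rule: ICARule) -> str:
    """Flatten a rule into a short, uniform text block for a prompt."""
    context = "; ".join(rule.context)
    return (
        f"Intent: {rule.intent}\n"
        f"Context: {context}\n"
        f"Action: {rule.action}"
    )


# Hypothetical rule, invented for this example.
example_rule = ICARule(
    intent="Guest asks to cancel a reservation",
    context=["Reservation starts in more than 48 hours", "Guest has not checked in"],
    action="Call the cancel_reservation tool and confirm to the guest",
)
prompt_text = render(example_rule)
```

The design choice worth noting is that nested conditions become a flat list under `context`, so the model sees each precondition as a separate, explicit item instead of inferring it from prose.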

Airbnb doesn't rely on a single model. Their production system uses 13 different models, each selected for specific strengths:

Why 13 models? Different tasks have different requirements:

Perhaps the most instructive lesson is where Airbnb deliberately limits LLM involvement:

As their engineering team notes: "It's more suitable to use a transition workflow instead of LLM to process claims that require sensitive data and strict validations."

This isn't a failure of LLMs - it's mature engineering judgment about where different technologies belong.

Fine-tuned Mistral-7B beat larger models in production because it answered in 4.5 seconds instead of 46. When your users are waiting for help, response time matters as much as response quality.

Takeaway: Benchmark your models on latency, not just accuracy. A 10% accuracy drop might be worth a 90% latency improvement.
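A small harness along these lines can report latency next to accuracy. It is only a sketch: `fast_model` and `slow_model` are stub functions that simulate response time with `time.sleep`, and the prompts and expected answers are placeholders, not real evaluation data.

```python
import statistics
import time
from typing import Callable, Dict, List


def benchmark(
    model_fn: Callable[[str], str],
    prompts: List[str],
    expected: List[str],
) -> Dict[str, float]:
    """Measure accuracy and per-request latency for one model callable."""
    latencies: List[float] = []
    correct = 0
    for prompt, want in zip(prompts, expected):
        start = time.perf_counter()
        got = model_fn(prompt)
        latencies.append(time.perf_counter() - start)
        correct += int(got == want)
    return {
        "accuracy": correct / len(prompts),
        "median_latency_s": statistics.median(latencies),
        "max_latency_s": max(latencies),
    }


# Stub models: the fast one is quicker but gets one placeholder prompt wrong.
def fast_model(prompt: str) -> str:
    time.sleep(0.001)
    return "" if prompt == "refund" else prompt.upper()


def slow_model(prompt: str) -> str:
    time.sleep(0.05)
    return prompt.upper()


prompts = ["hello", "refund", "cancel", "help"]
expected = [p.upper() for p in prompts]

fast_report = benchmark(fast_model, prompts, expected)
slow_report = benchmark(slow_model, prompts, expected)
```

Putting both numbers in one report makes the tradeoff explicit, so a team can decide how much accuracy it is willing to give up for a given latency gain.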

The ICA format provided 13-35% accuracy improvements before any fine-tuning. Restructuring how you present information to LLMs often yields a higher ROI than model optimization.

Takeaway: Audit how your business knowledge is structured. Complex, nested documents should be transformed into clear Intent-Context-Action formats.

By constraining LLMs to select from predefined tools rather than generate arbitrary outputs, Airbnb maintains control while gaining flexibility. The LLM reasons about what to do; validated code handles how to do it.

Takeaway: Design your agent architecture around tool selection, not open-ended generation. Define clear interfaces and execution environments for each capability.

Airbnb's guardrails run in parallel during every LLM interaction - content moderation, tool validation, response checking. This isn't bolted on; it's designed in.

Takeaway: Plan your safety mechanisms from the start. Design for parallel execution so guardrails don't become latency bottlenecks.

The best results came from combining LLM reasoning with deterministic workflows. Use LLMs for natural language understanding and flexible reasoning. Use traditional systems for validation, transactions, and policy enforcement.

Takeaway: Don't try to replace everything with LLMs. Identify where each technology's strengths align with your requirements.
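The hybrid split can be sketched as a simple router. Everything named here is hypothetical: the `SENSITIVE_INTENTS` set, the two handler functions, and the intent labels are illustrative stand-ins, and the source does not describe how Airbnb actually routes requests.

```python
from typing import Callable

# Assumed list of intents that need strict validation and sensitive data.
SENSITIVE_INTENTS = {"file_claim", "issue_refund"}


def deterministic_workflow(intent: str, message: str) -> str:
    """Placeholder for a predefined, fully validated workflow."""
    return f"workflow:{intent}"


def llm_path(intent: str, message: str) -> str:
    """Placeholder for the LLM-driven reasoning and tool-selection path."""
    return f"llm:{intent}"


def route(intent: str, message: str) -> str:
    """Send sensitive intents to fixed workflows and the rest to the LLM."""
    handler: Callable[[str, str], str] = (
        deterministic_workflow if intent in SENSITIVE_INTENTS else llm_path
    )
    return handler(intent, message)
```

For example, `route("file_claim", "My host damaged my luggage")` would go to the fixed workflow, while `route("ask_checkin_time", "When can I arrive?")` would go to the LLM path.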

Airbnb isn't replacing its customer support organization with AI. It's making every human agent more effective through AI augmentation. The 13% reduction in manual processing time means agents can handle more complex cases, not that Airbnb needs fewer agents. Netflix's LORE makes a related point: it prioritizes explainability over raw capability, which suggests that user trust often matters more than accuracy.

This is the pattern that works: AI handles the routine, surfaces the relevant, and recommends the next action. Humans handle the exceptions, make the judgment calls, and build the relationships.

For data teams considering similar investments, Airbnb's playbook offers a roadmap:

The future of enterprise AI isn't replacing human judgment with machine learning. It's amplifying human capability with intelligent automation. Airbnb's Automation Platform v2 shows what that looks like in practice.

Sources: "Automation Platform v2: Improving Conversational AI at Airbnb"; "Task-Oriented Conversational AI in Airbnb Customer Support"; "LLM-Friendly Knowledge Representation for Customer Support" (arXiv)