Text to SQL is solved. What's next?

Text to SQL is solved. Thanks to GPT-4, that debate is over. The real question now is what we should tackle next to get the most value out of it.

GPT-4: The Intelligence Revolution

GPT-4 is not just another incremental improvement; it's a paradigm shift. Consider these SQL generation examples, run against a sample e-commerce dataset in Dot.

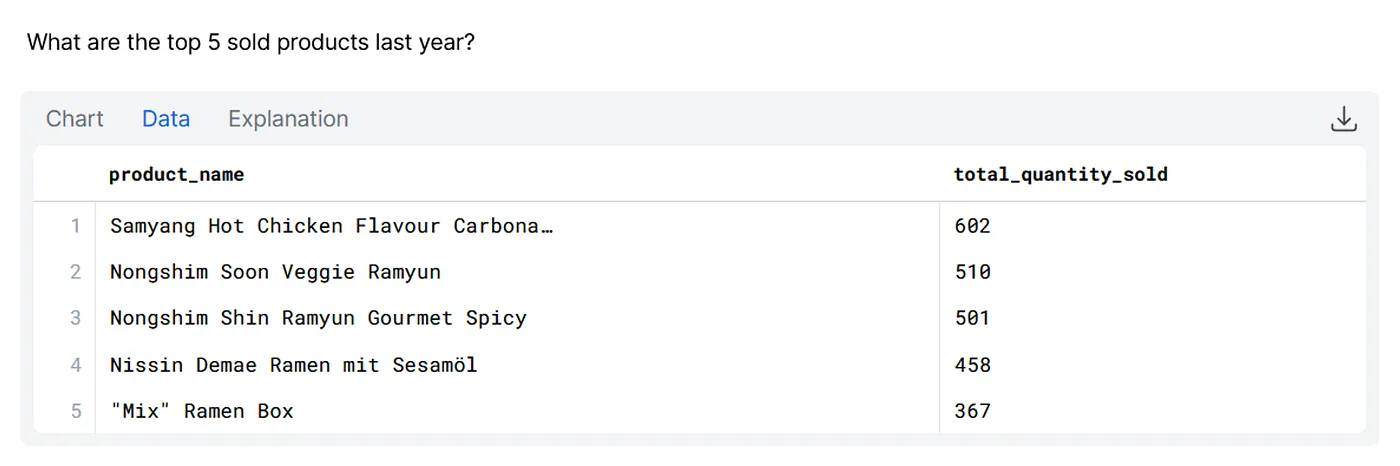

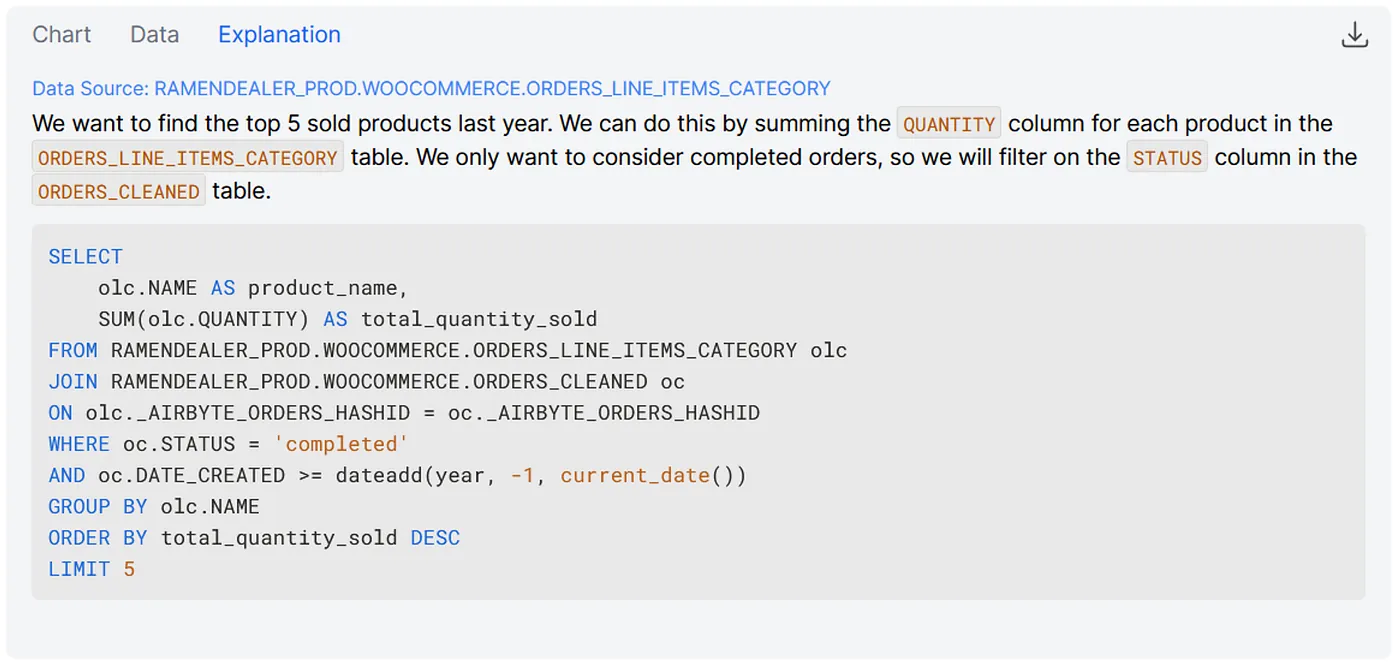

Example 1: What were the top 5 best-selling products last year?

To answer this question correctly, the agent needs to:
- map business meaning to columns
- filter for the correct timeframe
- aggregate a measure correctly
- join 2 tables on the correct key
- apply a relevant filter based on the meaning of the tables
- order the calculated values
- limit the result set
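The steps above map fairly directly onto a single SQL query. The sketch below is not the query Dot generated (that one is in the screenshots); it assumes a hypothetical two-table schema (`products`, `sales`) and reads "the meaning of the tables" as "cancelled sales don't count". It uses Python's built-in `sqlite3` so it runs as-is, and the helper names are illustrative only.

```python
import sqlite3
from datetime import date

# Hypothetical schema; the real tables behind the screenshots are not reproduced here.
SCHEMA = """
CREATE TABLE products (product_id INTEGER PRIMARY KEY, product_name TEXT);
CREATE TABLE sales (
    sale_id    INTEGER PRIMARY KEY,
    product_id INTEGER REFERENCES products(product_id),
    sale_date  TEXT,     -- ISO date such as '2023-04-01'
    quantity   INTEGER,
    status     TEXT      -- 'completed' or 'cancelled'
);
"""

TOP_PRODUCTS_SQL = """
SELECT p.product_name,
       SUM(s.quantity) AS units_sold                              -- aggregate a measure
FROM sales AS s
JOIN products AS p ON p.product_id = s.product_id                 -- join on the correct key
WHERE s.sale_date >= :start_date AND s.sale_date < :end_date      -- filter the timeframe
  AND s.status <> 'cancelled'                                     -- filter based on business meaning
GROUP BY p.product_id, p.product_name
ORDER BY units_sold DESC                                          -- order the calculated values
LIMIT 5                                                           -- limit the result set
"""

def top_products_last_year(conn, today):
    """Return (product_name, units_sold) for the 5 best sellers of the previous calendar year."""
    start = date(today.year - 1, 1, 1).isoformat()
    end = date(today.year, 1, 1).isoformat()
    return conn.execute(TOP_PRODUCTS_SQL, {"start_date": start, "end_date": end}).fetchall()

def build_sales_db():
    """Small in-memory dataset for trying the query."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO products VALUES (?, ?)",
                     list(enumerate("ABCDEFG", start=1)))
    conn.executemany(
        "INSERT INTO sales (product_id, sale_date, quantity, status) VALUES (?, ?, ?, ?)",
        [
            (1, "2023-02-10", 10, "completed"),
            (2, "2023-05-01", 8, "completed"),
            (3, "2023-07-19", 6, "completed"),
            (4, "2023-09-30", 4, "completed"),
            (5, "2023-11-11", 2, "completed"),
            (6, "2023-12-31", 1, "completed"),
            (7, "2023-06-15", 20, "cancelled"),   # excluded: cancelled
            (1, "2022-12-31", 50, "completed"),   # excluded: previous year
            (2, "2024-01-01", 100, "completed"),  # excluded: current year
        ],
    )
    return conn
```

Calling `top_products_last_year(build_sales_db(), date(2024, 3, 15))` returns `[('A', 10), ('B', 8), ('C', 6), ('D', 4), ('E', 2)]`: product G is dropped by the cancellation filter, F by the limit, and the 2022 and 2024 rows by the timeframe filter. Passing `today` in explicitly keeps "last year" deterministic instead of depending on the database clock.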

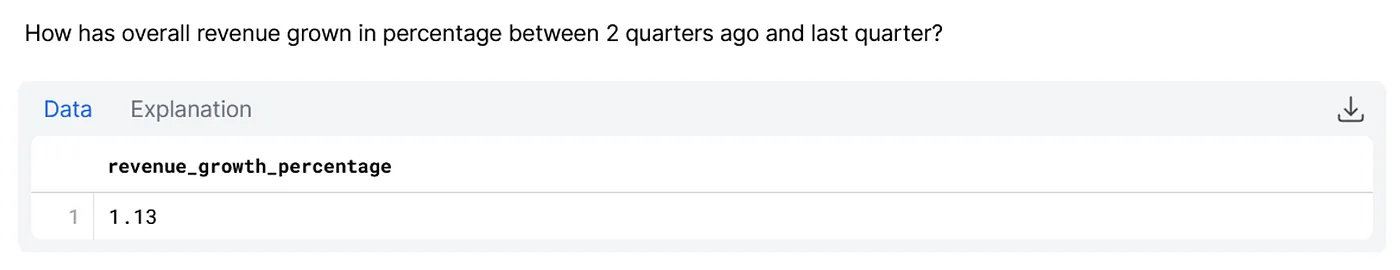

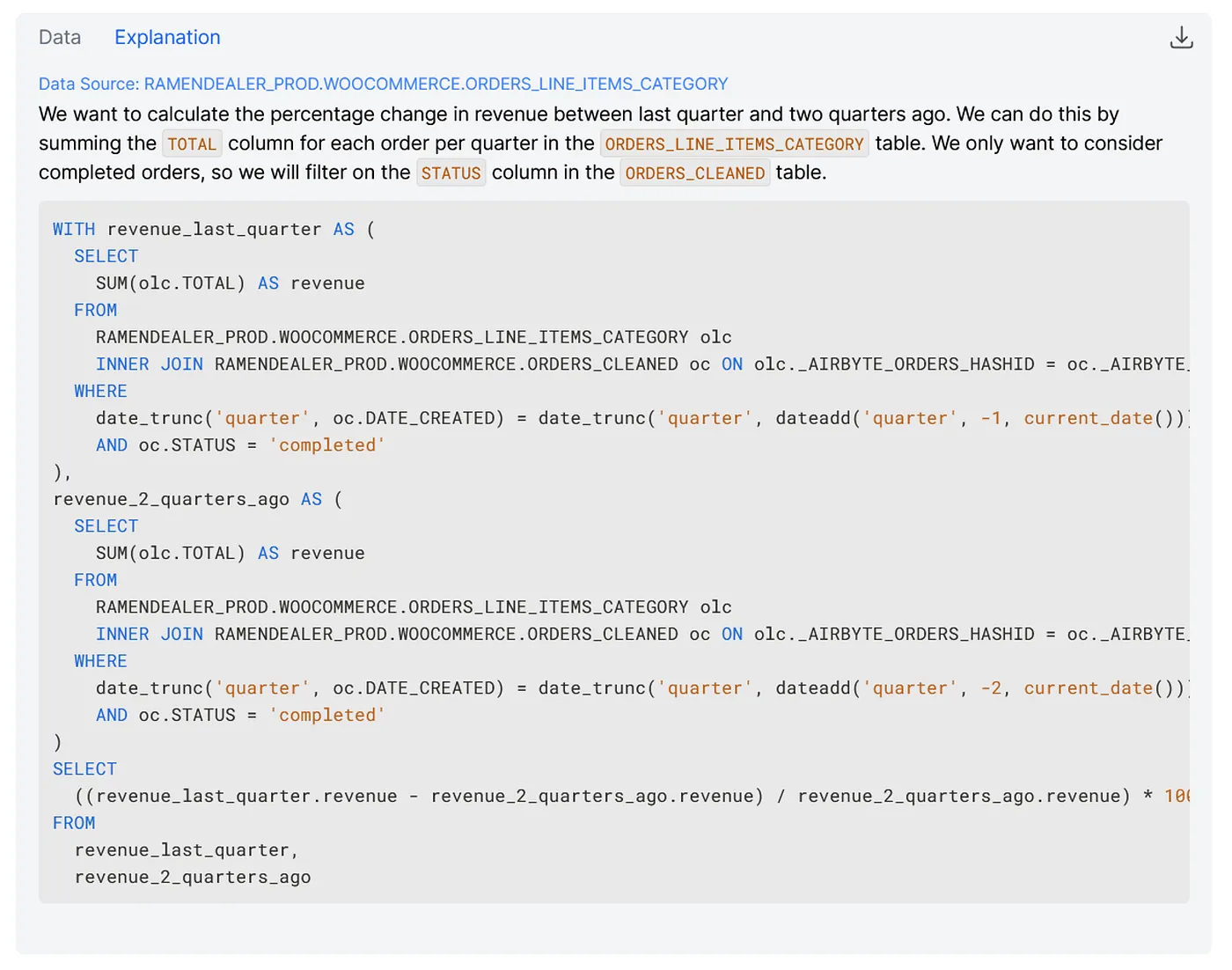

Example 2: By what percentage has overall revenue grown from 2 quarters ago to last quarter?

To answer this question correctly, the agent additionally needs to:
- do multi-step calculations with CTEs
- aggregate by the right time dimension
- use the correct formula to calculate percentage change
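A comparable sketch for Example 2, again on a hypothetical `orders` table in SQLite rather than the actual Dot dataset: the first CTE aggregates revenue by calendar quarter, the second pivots the two quarters being compared into one row, and the final SELECT applies the percentage-change formula (last - previous) / previous * 100. The helper `previous_quarters` and the quarter label format `YYYY-Qn` are assumptions made for illustration.

```python
import sqlite3
from datetime import date

# Hypothetical table; amounts are plain REAL values in a single currency.
SCHEMA = "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, order_date TEXT, revenue REAL)"

GROWTH_SQL = """
WITH quarterly AS (          -- step 1: aggregate by the right time dimension
    SELECT strftime('%Y', order_date) || '-Q' ||
           ((CAST(strftime('%m', order_date) AS INTEGER) + 2) / 3) AS quarter,
           SUM(revenue) AS total_revenue
    FROM orders
    GROUP BY quarter
),
compared AS (                -- step 2: pivot the two quarters into one row
    SELECT MAX(CASE WHEN quarter = :prev_q THEN total_revenue END) AS prev_rev,
           MAX(CASE WHEN quarter = :last_q THEN total_revenue END) AS last_rev
    FROM quarterly
)
SELECT prev_rev, last_rev,   -- step 3: percentage change (NULL if prev_rev is 0 or missing)
       ROUND(100.0 * (last_rev - prev_rev) / prev_rev, 2) AS pct_growth
FROM compared
"""

def previous_quarters(today):
    """Return ('YYYY-Qn' two quarters ago, 'YYYY-Qn' last quarter) relative to today."""
    current = today.year * 4 + (today.month - 1) // 3   # running quarter count

    def label(idx):
        return f"{idx // 4}-Q{idx % 4 + 1}"

    return label(current - 2), label(current - 1)

def revenue_growth(conn, today):
    """Return (previous revenue, last revenue, percentage growth)."""
    prev_q, last_q = previous_quarters(today)
    return conn.execute(GROWTH_SQL, {"prev_q": prev_q, "last_q": last_q}).fetchone()

def build_orders_db():
    """Small in-memory dataset for trying the query."""
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    conn.executemany(
        "INSERT INTO orders (order_date, revenue) VALUES (?, ?)",
        [
            ("2023-07-05", 1000.0), ("2023-09-30", 500.0),   # 2023-Q3: 1500
            ("2023-10-01", 1000.0), ("2023-12-31", 800.0),   # 2023-Q4: 1800
            ("2024-02-14", 5000.0),                          # current quarter, ignored
        ],
    )
    return conn
```

With the demo data, `revenue_growth(build_orders_db(), date(2024, 3, 15))` compares 2023-Q3 with 2023-Q4 and returns `(1500.0, 1800.0, 20.0)`. Note that the concatenated expression is parenthesized on purpose: in SQLite, `||` binds more tightly than arithmetic operators.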

These aren't just neat tricks; they're transformative capabilities.

Nor are these isolated anecdotes. On academic Text to SQL benchmarks, GPT-4-based approaches are outperforming more complex and specialized ones by a wide margin.

Can we now just plug GPT-4 into our database and expect valuable, correct insights? No.

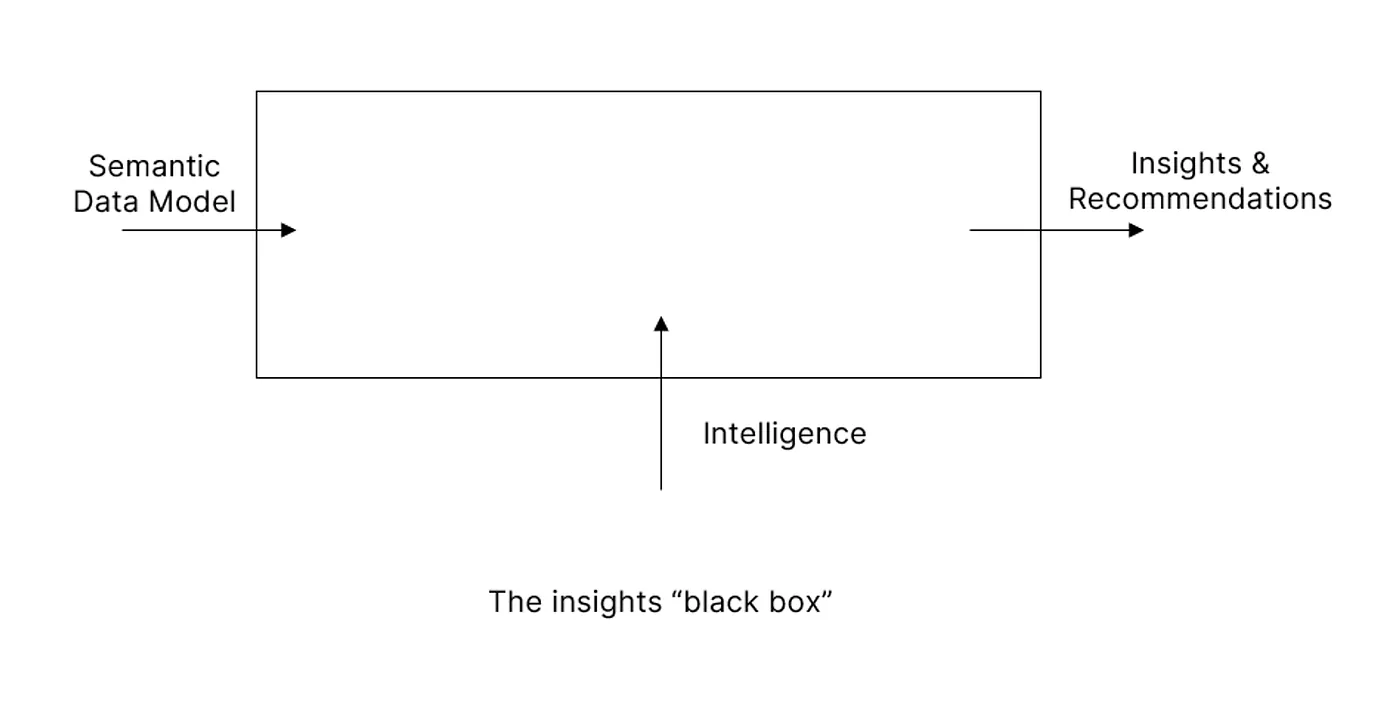

Great Output Requires Good Input

If you're not starting with a robust data model, you're setting yourself up to fail. This isn't just a suggestion; it's a mandate. Andy Grove (former CEO of Intel) made it clear: the quality of output is determined by the quality of input. In the world of data, that means clean data models and managed data quality (a.k.a. data products).

Next Challenges

1. Capture Implicit Knowledge

In an enterprise context, the data model is a moving target, and the knowledge of how to use it lives in the heads of the people who use it. It's implicit knowledge that isn't written down anywhere.

Without proper "training" or knowledge curation, LLMs often lack the context and information they need to come up with the right interpretation and query ad hoc.

So the next big challenge is building up a curriculum and knowledge base that lets an LLM get up to speed, much like a new colleague.
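One possible starting point for such a knowledge base is to write the implicit rules down as structured entries and hand the relevant ones to the model alongside the schema. The sketch below only assembles the prompt text; the `KnowledgeEntry` type, the example entries and the naive substring lookup are all hypothetical, and a real system would likely need better retrieval plus a review process to keep entries current as the data model shifts.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class KnowledgeEntry:
    term: str        # business term as colleagues use it
    definition: str  # the implicit rule, written down
    sql_hint: str    # how the rule maps onto the data model

# Hypothetical entries; in practice they would come from interviews, docs and past queries.
KNOWLEDGE_BASE = [
    KnowledgeEntry("revenue", "Revenue excludes cancelled and refunded orders.",
                   "SUM(orders.amount) with orders.status = 'completed'"),
    KnowledgeEntry("active customer", "A customer with a completed order in the last 90 days.",
                   "orders.order_date >= date('now', '-90 days')"),
    KnowledgeEntry("last year", "The previous calendar year, not the trailing 12 months.",
                   "strftime('%Y', order_date) = strftime('%Y', 'now', '-1 year')"),
]

def relevant_entries(question, kb=KNOWLEDGE_BASE):
    """Naive lookup: keep entries whose term appears in the question."""
    q = question.lower()
    return [entry for entry in kb if entry.term in q]

def build_prompt(question, schema, kb=KNOWLEDGE_BASE):
    """Assemble schema, curated definitions and the question into one prompt string."""
    parts = ["Translate the question into SQL.", "", "Schema:", schema, ""]
    entries = relevant_entries(question, kb)
    if entries:
        parts.append("Company definitions:")
        parts += [f"- {e.term}: {e.definition} SQL hint: {e.sql_hint}" for e in entries]
        parts.append("")
    parts.append(f"Question: {question}")
    return "\n".join(parts)
```

For "How many active customers did we have last year?", the prompt picks up the "active customer" and "last year" entries but not "revenue", which is the kind of context a new colleague would otherwise have to ask about.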

2. Automation of Data Engineering and Semantic Data Models

In a similar vein, the engineering of the data model and semantic models (like LookML, dbt Semantic Layer, or dotML) should be automated. Data pipelines are getting more and more complex, with hundreds or thousands of managed objects and a growing number of connected systems.

Ideally, we could just say: "I want to track the 10 most important metrics for my B2B SaaS business; the data comes from Salesforce. Do the rest." We are still far from that, but it doesn't seem completely crazy to imagine this being solved within the next 10 years.
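To make "semantic model" a little more concrete: tools like LookML or the dbt Semantic Layer broadly let you declare a metric once (its source table, aggregation and business filters) and generate the SQL from that declaration. The sketch below is a toy version of that idea and does not follow either tool's syntax; `Metric`, `compile_metric`, `run_metric` and the Salesforce-style `opportunities` table are hypothetical. Automating data engineering in the sense above would mean producing definitions like these without writing them by hand.

```python
import sqlite3
from dataclasses import dataclass

@dataclass(frozen=True)
class Metric:
    name: str                       # column alias for the metric
    table: str                      # source table
    expression: str                 # aggregate, e.g. "SUM(amount)"
    filters: tuple = ()             # WHERE predicates encoding business rules
    time_column: str = "created_at"

# SQLite strftime patterns for the supported time grains
GRAINS = {"day": "%Y-%m-%d", "month": "%Y-%m", "year": "%Y"}

def compile_metric(metric, grain="month"):
    """Render one metric as a grouped SQL query (definitions must be trusted: no escaping)."""
    period = f"strftime('{GRAINS[grain]}', {metric.time_column})"
    where = f"\nWHERE {' AND '.join(metric.filters)}" if metric.filters else ""
    return (
        f"SELECT {period} AS period, {metric.expression} AS {metric.name}\n"
        f"FROM {metric.table}{where}\n"
        "GROUP BY period\n"
        "ORDER BY period"
    )

def run_metric(conn, metric, grain="month"):
    """Compile the metric and execute it, returning (period, value) rows."""
    return conn.execute(compile_metric(metric, grain)).fetchall()

# Hypothetical metric over a Salesforce-style opportunities table
WON_REVENUE = Metric(
    name="won_revenue",
    table="opportunities",
    expression="SUM(amount)",
    filters=("stage = 'Closed Won'",),
    time_column="close_date",
)
```

Because the business rule (`stage = 'Closed Won'`) lives in the definition, every query compiled from `WON_REVENUE`, at any grain, applies it consistently; that consistency is much of what a semantic layer is for.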

3. Visualizations, Interpretation and Actions

There is a ton of upside in making the output layer of analytics more intelligent. Think of how much time goes into editing colors and chart configurations in your favorite Tableau dashboard, which in 4 out of 5 cases will never be looked at again.

And we don't just want visualizations; we want interpretation. What does the data mean for our business? What actions should be taken? Ideally, the system could even trigger some of those actions itself.

The CEO of the future is an AI.

Conclusion: Test AI & Invest in Good Information

We've shown that Text to SQL is no longer the frontier: thanks to GPT-4, we've conquered that mountain. Looking forward, here are the immediate actions we must take to ensure this technology fully matures:

Test AI Agents: Don't take my word for it; test GPT-4 and future iterations. See how they handle your specific needs and use cases. AI agents aren't a theoretical solution; they're practical tools that are here today, awaiting your evaluation.

Invest in Fundamentals: It's not glamorous, but excellence rarely is. Quality data and robust data models are the bedrock upon which all these advances rest. We've got to get our hands dirty, improve data quality, and build models that stand up to scrutiny.
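For the first action, testing AI agents on your own use cases, one practical approach is to collect a handful of real questions with hand-written reference queries and compare the agent's SQL by the rows it returns rather than by its text (Text to SQL benchmarks commonly call this execution accuracy). A minimal sketch in SQLite, assuming the generated SQL has already been obtained from whichever agent you are testing; `results_match` and `execution_accuracy` are hypothetical helpers, not part of any product.

```python
import sqlite3
from collections import Counter

def results_match(conn, generated_sql, reference_sql, ordered=False):
    """True if the generated query returns the same rows as the reference query."""
    try:
        got = conn.execute(generated_sql).fetchall()
    except sqlite3.Error:
        return False                            # SQL that fails to run counts as a miss
    expected = conn.execute(reference_sql).fetchall()
    if ordered:                                 # e.g. "top 5" questions, where order matters
        return got == expected
    return Counter(got) == Counter(expected)    # ignore order, but keep duplicate rows

def execution_accuracy(conn, cases):
    """cases: (question, generated_sql, reference_sql, ordered) tuples; returns share matched."""
    cases = list(cases)
    if not cases:
        return 0.0
    hits = sum(results_match(conn, gen, ref, ordered) for _question, gen, ref, ordered in cases)
    return hits / len(cases)
```

Since generated SQL is untrusted, it is safer to run this against a read-only connection or a copy of the data. Use `ordered=True` only for questions where row order is part of the answer.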

By taking these actions, we're not passively awaiting the future; we're actively shaping it. And that is the key to realizing the untapped potential of Text to SQL and, by extension, GPT-4.

Your next move is clear. Start testing and start investing. Because the future isn't something that just happens; it's something we make together.


If you want to see how far your data models take you, try Dot for free.

Rick Radewagen

Rick is a co-founder of Dot, on a mission to make data accessible to everyone. When he's not building AI-powered analytics, you'll find him obsessing over well-arranged pixels and surprising himself by learning new languages.